Quality Calibration Sessions: Best Practices Guide

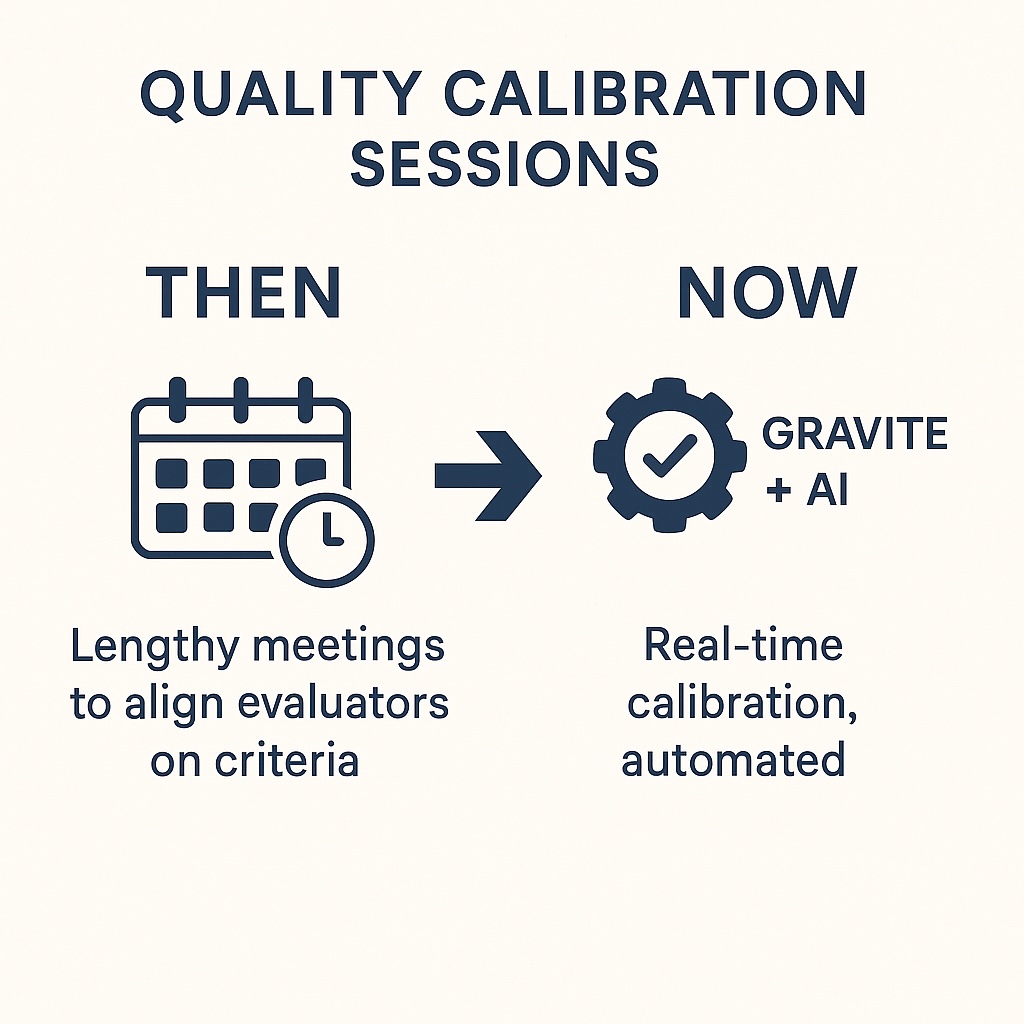

Aligning evaluators used to take hours of meetings, spreadsheets, and debates. Every month, QA leaders ran lengthy calibration sessions just to make sure everyone interpreted the scoring criteria the same way. Today, with Gravite and AI, calibration happens in real time: the AI evaluates every interaction against your criteria and continuously measures how well evaluators align.

Calibration sessions still matter for the human side, but they are now lighter, faster, and sharply focused.

In this guide, we’ll show you:

- How calibration used to work.

- Why it’s still valuable for trust and training.

- How Gravite’s AI removes the heavy manual workload and keeps your team aligned automatically.

1. Why Calibration Used to Be Critical

Traditionally, calibration was the only way to keep scores consistent across evaluators.

Without it:

- Each evaluator applied the guidelines differently.

- Agents felt judged unfairly.

- Managers struggled to trust the QA data for coaching or performance decisions.


Calibration was the glue that held the QA process together.

2. The Old Way of Doing It

For years, calibration sessions looked like this:

- Monthly or bi-weekly meetings of 1–2 hours.

- Everyone scored the same set of calls beforehand.

- During the meeting, the team compared scores, debated, and agreed on an “official” score.

- QA guidelines were updated based on the discussion.

This process was time-consuming and tedious, but necessary.

3. How Gravite + AI Change the Game

With Gravite, there’s no need to wait until the next session to find out whether evaluators are aligned:

- The AI analyzes 100% of calls, emails, and chats in real time.

- It compares human scores with its own and flags inconsistencies instantly.

- At any moment, managers can see which criteria cause misalignment and which evaluators are involved.

- Adjustments can be made on the fly, so calibration sessions become short and highly targeted.
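Gravite's internal scoring logic isn't described here, but the kind of comparison in the list above can be sketched in a few lines. The Python below is a minimal illustration, assuming each record pairs one human score and one AI score for a single criterion on a single interaction, on a 0-100 scale. The `Score` record, the `misalignment_by_evaluator_criterion` function, and the 15-point threshold are all hypothetical. It groups the absolute human-vs-AI gap by evaluator and criterion, which answers "which criteria, and with whom".

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Score:
    """One human score and one AI score for a single criterion (hypothetical schema)."""
    interaction_id: str
    evaluator: str
    criterion: str
    human: float  # assumed 0-100 scale
    ai: float     # AI score for the same interaction and criterion


def misalignment_by_evaluator_criterion(scores, threshold=15.0):
    """Return {(evaluator, criterion): mean absolute human-vs-AI gap}
    for every pair whose average gap exceeds `threshold` (hypothetical cut-off)."""
    gaps = defaultdict(list)
    for s in scores:
        gaps[(s.evaluator, s.criterion)].append(abs(s.human - s.ai))
    averages = {key: mean(values) for key, values in gaps.items()}
    return {key: avg for key, avg in averages.items() if avg > threshold}


# Example: Alice scores empathy well below the AI; Bob is aligned.
scores = [
    Score("call-1", "alice", "empathy", 40, 80),
    Score("call-2", "alice", "empathy", 50, 75),
    Score("call-1", "bob", "empathy", 78, 80),
    Score("chat-3", "alice", "greeting", 90, 88),
]
print(misalignment_by_evaluator_criterion(scores))
# {('alice', 'empathy'): 32.5}
```

Averaging the absolute gap, rather than the signed difference, keeps an evaluator who is sometimes too harsh and sometimes too lenient from looking aligned; a signed average could be added alongside it to show the direction of the drift.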

👉 The result:

- Fewer, shorter meetings.

- Continuous alignment between human scorers.

- More transparency and fairness for agents.

4. Why Humans Still Meet

Even with AI, live sessions are still valuable to:

- Train new evaluators on the company’s quality culture.

- Clarify subjective criteria like empathy or tone.

- Build trust between QA, managers, and agents by openly discussing scoring decisions.

These sessions now run every 1–3 months, lasting about 1 hour, and focus only on cases where human and AI scores diverge.

5. A Modern 60-Minute Session Structure

- AI pre-analysis – Gravite surfaces the interactions with the largest human vs. AI disagreements.

- Quick review – Focus only on 5–8 flagged cases instead of dozens.

- Targeted discussion – Debate just the criteria that caused the differences.

- Consensus & updates – Adjust the QA guidelines directly inside Gravite.

- Wrap-up – Document changes; updates go live instantly across all evaluations.
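The AI pre-analysis and quick-review steps above amount to ranking interactions by human-vs-AI disagreement and keeping only the top few. Here is a minimal Python sketch, assuming scores arrive as hypothetical `(interaction_id, criterion, human_score, ai_score)` tuples; the `session_agenda` function and its `max_cases` default of 8 (the top of the 5–8 range) are illustrative choices, not Gravite's API.

```python
from collections import defaultdict


def session_agenda(scores, max_cases=8):
    """Rank interactions by total human-vs-AI gap across all criteria.

    `scores` is an iterable of (interaction_id, criterion, human, ai) tuples.
    Returns up to `max_cases` tuples of (interaction_id, total_gap, worst_criterion),
    largest total gap first.
    """
    totals = defaultdict(float)
    worst = {}  # interaction_id -> (criterion, gap) with the single largest gap
    for interaction_id, criterion, human, ai in scores:
        gap = abs(human - ai)
        totals[interaction_id] += gap
        if interaction_id not in worst or gap > worst[interaction_id][1]:
            worst[interaction_id] = (criterion, gap)
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [(iid, total, worst[iid][0]) for iid, total in ranked[:max_cases]]


scores = [
    ("call-1", "empathy", 40, 80),
    ("call-1", "tone", 70, 75),
    ("chat-7", "resolution", 90, 60),
    ("email-3", "greeting", 88, 90),
]
print(session_agenda(scores, max_cases=2))
# [('call-1', 45.0, 'empathy'), ('chat-7', 30.0, 'resolution')]
```

Returning the worst criterion for each case gives the targeted-discussion step a starting point: the team debates that criterion first instead of rescoring the whole interaction.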

6. Real-Time Benefits

- Continuous consistency: no waiting weeks to fix misalignments.

- Time savings: managers spend more time coaching, less time in meetings.

- Higher acceptance: agents see fairer, more consistent scoring.

- Less bias: the AI acts as a neutral benchmark.